An AI Agent That Can See Gorillas

Introduction

I recently read an interesting blog post entitled "Your AI Can't See Gorillas" by Chiraag Gohel. The post describes an experiment based on a research paper where a scatter plot of BMI versus daily steps forms a very obvious gorilla shape. Yet, when Gohel asked ChatGPT and Claude to analyze the data, they completely missed the gorilla, even when explicitly told to look at the plot.

This honestly surprised me. I had way more faith in these models and assumed they'd spot something as blatant as a giant primate staring them in the face. This led me to question whether a more advanced AI agent could recognize the gorilla.

Why the Gorilla Test Matters

The gorilla test isn't just a fun exercise; it reveals fundamental limitations in how information gets processed, both by humans and by the AI systems we build.

This experiment is a data-focused version of the famous "invisible gorilla" psychological study, in which participants busy counting basketball passes often fail to notice a person in a gorilla suit walking through the scene. It demonstrates "inattentional blindness": our tendency to overlook obvious things when our attention is directed elsewhere. Gohel's post references a study by Itai Yanai and Martin Lercher [3] in which participants analyzed a dataset designed to form a gorilla shape when plotted. People who were given a specific hypothesis to test rarely noticed the pattern, while those who explored the data more freely were far more likely to see the gorilla.

| Group | Gorilla not discovered | Gorilla discovered |
|---|---|---|
| Hypothesis-focused | 14 | 5 |
| Hypothesis-free | 5 | 9 |

Source: Yanai & Lercher [3]
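Turning the counts into rates makes the gap easier to see. The snippet below is only arithmetic on the table above, not the analysis from the original paper: about 26% of the hypothesis-focused group found the gorilla versus about 64% of the hypothesis-free group, an odds ratio of roughly 5.

```python
# Counts copied from the Yanai & Lercher table above.
counts = {
    "Hypothesis-focused": {"missed": 14, "found": 5},
    "Hypothesis-free": {"missed": 5, "found": 9},
}

def discovery_rate(group: dict) -> float:
    """Fraction of participants in a group who spotted the gorilla."""
    return group["found"] / (group["found"] + group["missed"])

def odds_ratio(free: dict, focused: dict) -> float:
    """Odds of discovery in the hypothesis-free group relative to the focused group."""
    return (free["found"] / free["missed"]) / (focused["found"] / focused["missed"])

for name, group in counts.items():
    print(f"{name}: {discovery_rate(group):.0%} found the gorilla")

ratio = odds_ratio(counts["Hypothesis-free"], counts["Hypothesis-focused"])
print(f"Odds ratio (free vs. focused): {ratio:.2f}")
```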

When Gohel tested ChatGPT (GPT-4o) and Claude 3.5 Sonnet on this dataset, both models failed. They produced detailed statistical analyses but missed the gorilla staring right at them, even when specifically prompted to look for visual patterns. This raises questions about the limitations of current AI systems and how we might overcome them.

The gorilla pattern hidden in the dataset

Building My AI Detective

I have spent some time developing AI agents for personal projects, so I decided to build a specialized data analytics agent using Claude 3.7 Sonnet and let it go bananas on the dataset.

To give my agent the best chance of success, I equipped it with several Python-based data analysis tools:

Visualization

- `create_scatter_plot`
- `generate_histographs`
- `overlay_trend_lines`
- `create_advanced_visualization`

Data Manipulation

- `rotate_data`
- `zoom_to_region`

Statistical Analysis

- `analyze_distributions`
- `describe_data`
- `perform_hypothesis_test`
- `compute_correlation_matrix`
- `calculate_confidence_intervals`

Pattern Detection

- `cluster_data`
- `apply_edge_detection`
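For readers wondering how an agent "has access" to tools like these: with Anthropic's Messages API, each tool is declared as a name, a description, and a JSON Schema for its inputs, and the list is passed through the `tools` parameter of `client.messages.create`. The definitions below are a hypothetical sketch for a few of the tools listed above; the parameter names (`x`, `y`, `angle_degrees`, and so on) are illustrative assumptions, not taken from the original agent.

```python
# Hypothetical tool definitions in the shape the Anthropic Messages API expects:
# a name, a description, and a JSON Schema describing the inputs.
# Parameter names are illustrative assumptions, not the original agent's.
TOOLS = [
    {
        "name": "create_scatter_plot",
        "description": "Render a scatter plot of two columns and return it as a PNG image.",
        "input_schema": {
            "type": "object",
            "properties": {
                "x": {"type": "string", "description": "Column for the x-axis"},
                "y": {"type": "string", "description": "Column for the y-axis"},
                "hue": {"type": "string", "description": "Optional column to color points by"},
            },
            "required": ["x", "y"],
        },
    },
    {
        "name": "rotate_data",
        "description": "Rotate the (x, y) point cloud about its centroid before plotting.",
        "input_schema": {
            "type": "object",
            "properties": {
                "angle_degrees": {"type": "number", "description": "Counterclockwise rotation angle"},
            },
            "required": ["angle_degrees"],
        },
    },
    {
        "name": "zoom_to_region",
        "description": "Restrict the data to a rectangular region of the plot.",
        "input_schema": {
            "type": "object",
            "properties": {
                "x_min": {"type": "number"},
                "x_max": {"type": "number"},
                "y_min": {"type": "number"},
                "y_max": {"type": "number"},
            },
            "required": ["x_min", "x_max", "y_min", "y_max"],
        },
    },
    {
        "name": "apply_edge_detection",
        "description": "Run edge detection on the current scatter plot and return the edge map.",
        "input_schema": {
            "type": "object",
            "properties": {
                "bins": {"type": "integer", "description": "Grid resolution used to rasterize points"},
            },
            "required": [],
        },
    },
]
```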

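My agent, as illustrated in the diagram below, could decide for itself which tools to use and explore the dataset freely, much as a human analyst would. It builds on "prompt chaining," a pattern Anthropic describes in their research on AI agents [1] in which each step's output (such as a rendered plot) feeds into the next prompt; the difference is that the model, not a fixed script, chose each next step.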

Prompt chaining workflow used in this project.
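The control flow behind this kind of agent can be sketched as a simple loop: ask the model what to do, run the tool it picks, append the result to the conversation, and repeat until it answers. This is a minimal, hypothetical sketch rather than the 1,500-line implementation mentioned later; `model_step`, `TOOL_REGISTRY`, and `scripted_model` are stand-ins. In a real agent, `model_step` would call `client.messages.create(...)` with the tool definitions and act on any `tool_use` blocks in the response.

```python
from typing import Callable

# Hypothetical stand-ins for real tools (the actual ones rendered plots, ran statistics, etc.).
def describe_data(args: dict) -> str:
    return "summary statistics for BMI and daily steps"

def apply_edge_detection(args: dict) -> str:
    return "edge map of the scatter plot"

TOOL_REGISTRY: dict[str, Callable[[dict], str]] = {
    "describe_data": describe_data,
    "apply_edge_detection": apply_edge_detection,
}

def run_agent(model_step: Callable[[list], dict], task: str, max_steps: int = 10) -> tuple[str, list]:
    """Loop until the model returns a final answer or the step budget runs out.

    `model_step` stands in for one LLM call: given the conversation so far, it returns
    either {"tool": name, "args": {...}} or {"answer": text}.
    """
    history: list = [{"role": "user", "content": task}]
    for _ in range(max_steps):
        decision = model_step(history)
        if "answer" in decision:
            return decision["answer"], history
        # The model, not this code, decides which tool runs next.
        result = TOOL_REGISTRY[decision["tool"]](decision.get("args", {}))
        history.append({"role": "assistant", "content": f"call {decision['tool']}"})
        # Feed the tool output back so the next call can build on it.
        history.append({"role": "user", "content": result})
    return "step limit reached", history

def scripted_model(history: list) -> dict:
    """Fake model that follows a fixed plan, only to exercise the loop."""
    plan = [{"tool": "describe_data"}, {"tool": "apply_edge_detection"}]
    steps_taken = (len(history) - 1) // 2
    if steps_taken < len(plan):
        return plan[steps_taken]
    return {"answer": "The points trace the outline of a gorilla."}

answer, history = run_agent(scripted_model, "Analyze the BMI vs. daily steps dataset.")
print(answer)
```

The step budget (`max_steps`) is a small guardrail: an agent that chooses its own path also needs a point at which it is forced to stop.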

Watching the Agent Discover the Gorilla

Watching the agent tackle this problem in real time was fun and mildly humbling: it performed an in-depth data analysis in about the time it takes me to start up a Jupyter notebook.

Here's how it approached the problem:

- First, it performed basic descriptive analysis like checking means, standard deviations, and general data distributions.

- Then, it created a basic scatter plot and (importantly) passed the image of the plot back into the model, just like a human would review their own work.

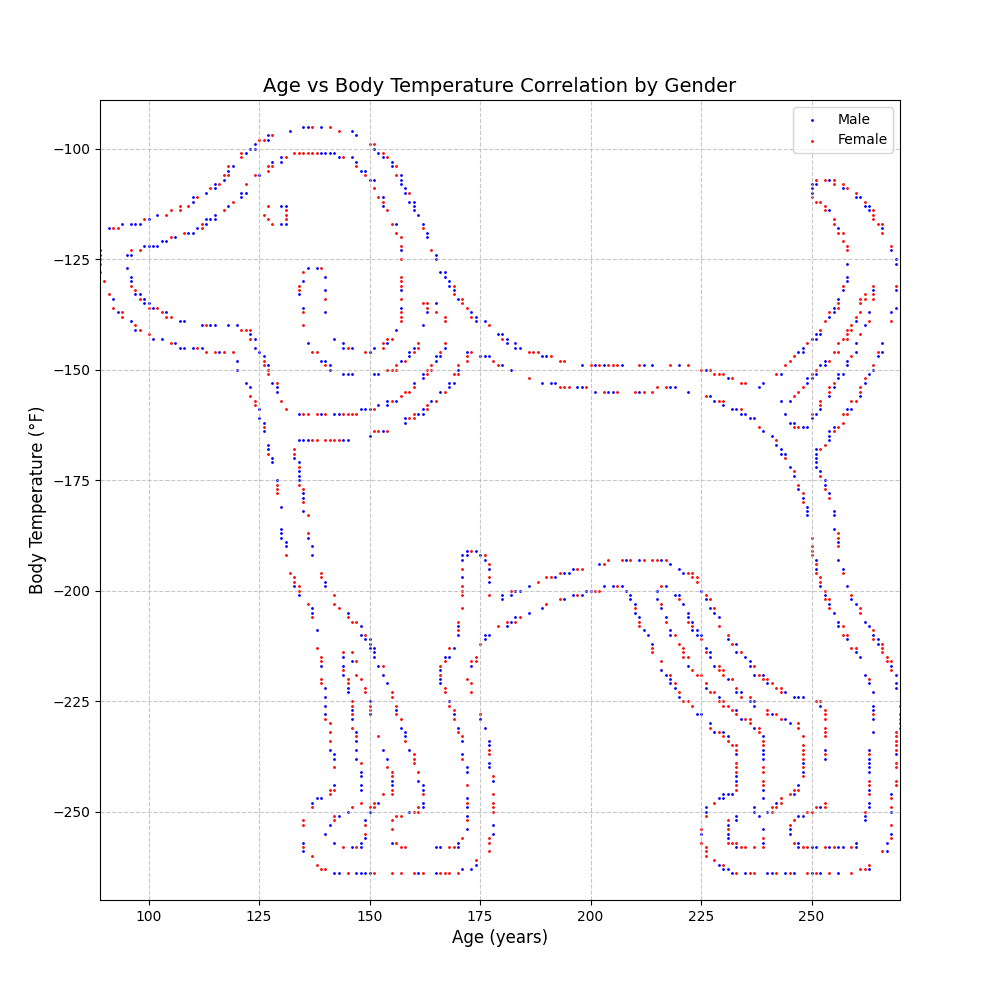

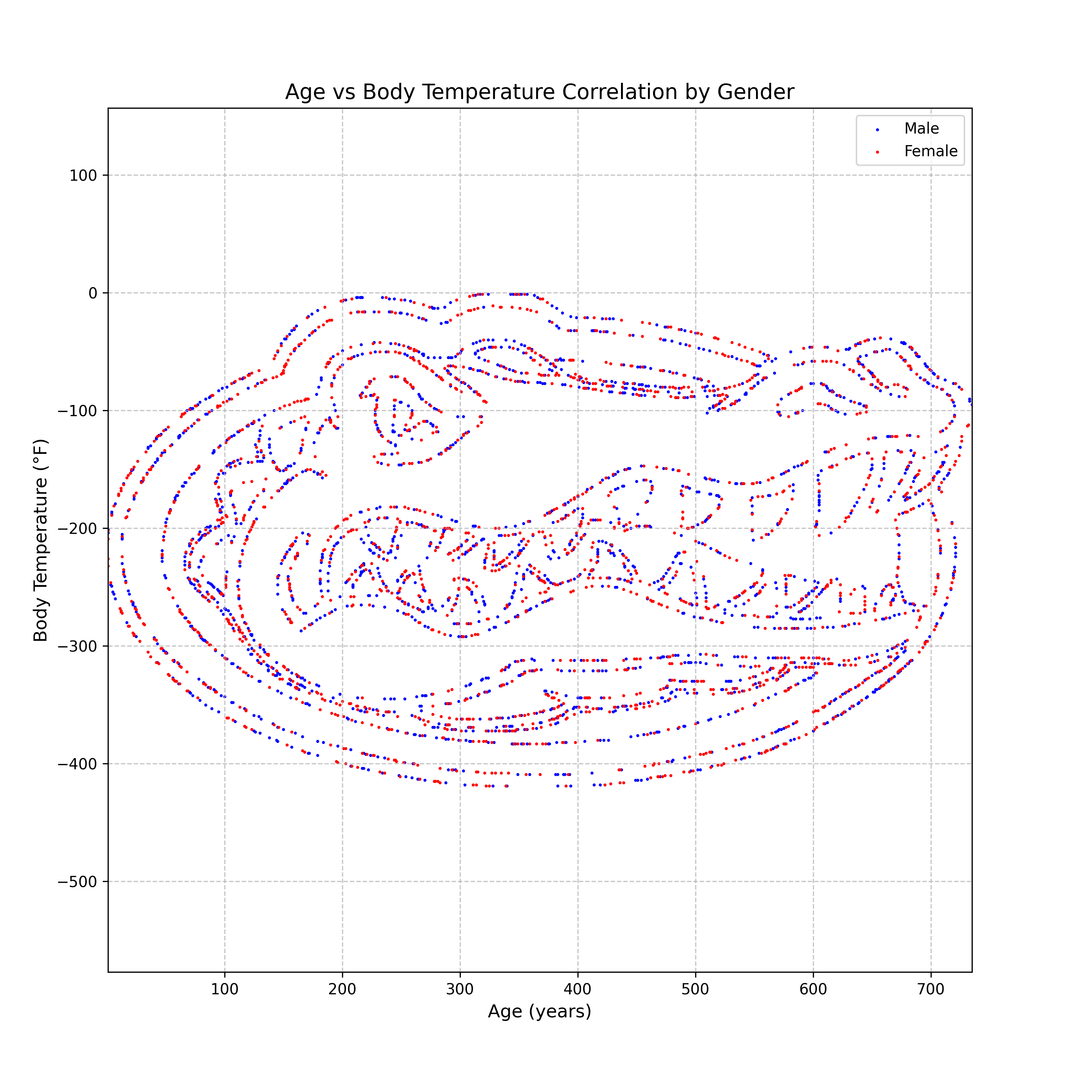

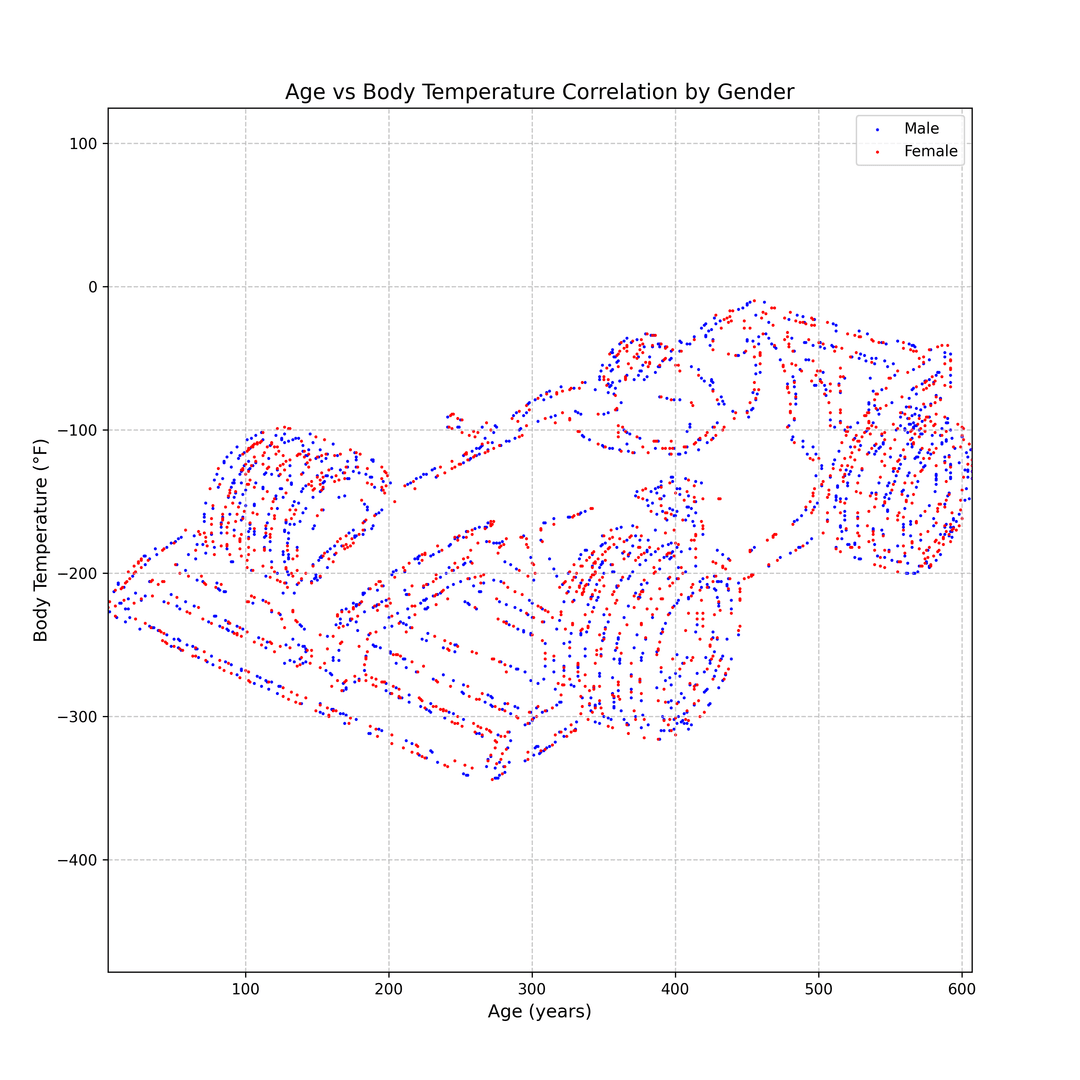

- It then tested gender-based visualizations to see if there were any differences between male and female participants.

At this point, the agent began to detect an unusual pattern forming in the scatter plot. While it couldn't immediately identify what the pattern represented, it recognized that the data distribution wasn't random.
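Feeding a plot back into the model, as the agent did after its first scatter plot, mostly comes down to packaging the image bytes. Assuming the Anthropic Messages API, which accepts base64-encoded images as content blocks, a minimal sketch looks like this; the helper names are hypothetical, and rendering the plot itself is left out.

```python
import base64

def image_block_from_png(png_bytes: bytes) -> dict:
    """Wrap raw PNG bytes as a base64 image content block."""
    return {
        "type": "image",
        "source": {
            "type": "base64",
            "media_type": "image/png",
            "data": base64.standard_b64encode(png_bytes).decode("ascii"),
        },
    }

def review_message(png_bytes: bytes, question: str) -> dict:
    """Build a user turn that shows the model its own plot and asks about it."""
    return {
        "role": "user",
        "content": [
            image_block_from_png(png_bytes),
            {"type": "text", "text": question},
        ],
    }
```

In practice, `png_bytes` might come from saving a matplotlib figure into an `io.BytesIO` buffer with `fig.savefig(buffer, format="png")` and then calling `buffer.getvalue()`.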

The breakthrough came with edge detection. When my agent applied the Canny edge detection tool, the gorilla shape became unmistakably clear. Unlike ChatGPT and Claude in Gohel's chat-based test, which missed this visual pattern entirely, my agent not only identified the gorilla but also correctly interpreted the significance of this hidden structure within the data.

Here's what it concluded:

"This dataset appears to be a demonstration of 'inattentional blindness' in data analysis—similar to the famous 'invisible gorilla' psychological experiment where observers, focused on counting basketball passes, miss a person in a gorilla suit walking through the scene..."

I have to admit, I didn't expect the agent to completely figure out the point of the experiment. But it did. And I was impressed.

Edge detection results
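The post doesn't say how the edge detection tool was implemented beyond naming the Canny algorithm. As a rough illustration of the idea, the numpy-only sketch below rasterizes the scatter points into a grid and marks cells where occupancy changes sharply. Note that it swaps in a simpler technique, a Sobel gradient with a single threshold, and omits Canny's Gaussian smoothing, non-maximum suppression, and hysteresis thresholding; `edge_map`, `bins`, and `threshold` are hypothetical names. A real tool would more likely render the plot and call an existing Canny implementation such as OpenCV's `cv2.Canny` or scikit-image's `skimage.feature.canny`.

```python
import numpy as np

def edge_map(x, y, bins: int = 64, threshold: float = 0.2) -> np.ndarray:
    """Rasterize scatter points and mark grid cells where point occupancy changes sharply.

    Simplified stand-in for Canny (hypothetical helper): Sobel gradient magnitude plus a
    global threshold, without smoothing, non-maximum suppression, or hysteresis.
    """
    # Turn the points into an image: 1.0 where a grid cell contains any point, else 0.0.
    density, _, _ = np.histogram2d(x, y, bins=bins)
    img = (density > 0).astype(float)

    # Sobel kernels approximate the derivative along each grid axis.
    kx = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
    ky = kx.T
    padded = np.pad(img, 1, mode="constant")
    gx = np.zeros_like(img)
    gy = np.zeros_like(img)
    for i in range(3):
        for j in range(3):
            window = padded[i:i + img.shape[0], j:j + img.shape[1]]
            gx += kx[i, j] * window
            gy += ky[i, j] * window

    # Normalize the gradient magnitude to [0, 1] and keep only strong changes.
    magnitude = np.hypot(gx, gy)
    if magnitude.max() > 0:
        magnitude /= magnitude.max()
    return magnitude > threshold
```

On the gorilla dataset, the cells marked `True` would trace the outline of the filled regions in the scatter plot rather than the dense interior.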

Why My Agent Succeeded Where ChatGPT Failed

So why did my AI agent succeed where the chat-based models in Gohel's test flopped? A few key reasons:

- Better tools: Edge detection was a game-changer. You could argue it's unfair to give an AI this ability, but I never forced it to use the tool; it was simply one option among many (some of which were deliberate red herrings that weren't needed to solve the problem).

- Freedom to explore: Unlike standard AI models and workflows that follow rigid paths, my agent could choose its own approach and try different tools.

- Emphasis on visualization: Instead of just calculating the numbers, the agent actively generated and analyzed different images, using its vision model the way a human would.

But there's something even more fundamental at play. Knowledge alone is limited; progress comes from the ability to explore beyond it. Anthropic's research [1] emphasizes letting an agent maintain control over how it solves open-ended problems rather than forcing it into a rigid workflow. My experiment is consistent with this: creativity isn't just an advantage but a necessity.

See the Complete Agent Process

Detailed step-by-step timeline of how the agent discovered the gorilla

Do We Really Need This Much Complexity?

At this point, a fair question is: Do we actually need an entire AI agent with 1,500+ lines of Python just to spot a gorilla in a scatter plot?

I mean, this feels like something a basic AI should be able to do. Right?

The usual advice, including Anthropic's [1], is to find the simplest solution possible and add complexity only when it's clearly needed. In many cases, that means not building a fully agentic system at all.

But in this case, I would argue simple isn't enough. Without a more flexible approach, the AI most likely would not have found the pattern.

Kafka once wrote:

"It is often safer to be in chains than to be free."

Much of AI today operates in chains, limited by pre-set rules and a narrow focus. My agent succeeded because it was given the freedom to explore. It saw beyond the numbers because it wasn't bound by traditional constraints.

However, we must acknowledge that there's a delicate balance to maintain. While autonomy enables discovery and innovation, it must be paired with appropriate safety guardrails. The ideal AI systems of the future will combine the freedom to explore with the responsibility to operate within ethical boundaries.

Testing AI's Pattern Recognition Abilities

Since developing this agent, I've expanded my testing beyond artificial datasets to real-world data. In my experience, applying similar versions of the agent to practical analytical tasks has produced a noticeable improvement over traditional methods.

This success made me curious: how well do current AI models handle other hidden patterns? To find out, I tested ChatGPT with several visual patterns embedded in data. The results were consistently disappointing: even when the patterns were obvious to a human, the model struggled to identify them without specialized tools and approaches. Interestingly, the only pattern it correctly identified was my university's Gator logo (Go Gators!).
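The post doesn't describe how these test datasets were built. One simple approach, assumed here, is to draw a coarse bitmap, sample points only inside its filled cells, and rescale them to plausible axis ranges. The mask (a rough question mark), the `sample_pattern` helper, and the Age and Body Temperature ranges below are all hypothetical.

```python
import random

# Hypothetical 7x7 mask: "#" cells are where points get drawn (a rough question mark).
MASK = [
    "..###..",
    ".#...#.",
    ".....#.",
    "....#..",
    "...#...",
    ".......",
    "...#...",
]

def sample_pattern(mask, n, x_range, y_range, seed=0):
    """Scatter n points uniformly inside the '#' cells of mask, rescaled to the axis ranges."""
    rng = random.Random(seed)
    cells = [(r, c) for r, row in enumerate(mask) for c, ch in enumerate(row) if ch == "#"]
    n_rows, n_cols = len(mask), len(mask[0])
    x_lo, x_hi = x_range
    y_lo, y_hi = y_range
    points = []
    for _ in range(n):
        r, c = rng.choice(cells)
        # Jitter within the cell; flip rows so the first mask row ends up at the top of the plot.
        fx = (c + rng.random()) / n_cols
        fy = (n_rows - 1 - r + rng.random()) / n_rows
        points.append((x_lo + fx * (x_hi - x_lo), y_lo + fy * (y_hi - y_lo)))
    return points

# Hypothetical ranges: Age in years on x, Body Temperature in °C on y.
points = sample_pattern(MASK, 500, x_range=(20, 80), y_range=(36.0, 38.5))
```

Plotted as a scatter, the points reproduce the mask's shape, while summary statistics such as means or correlations give no obvious hint of it.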

Hidden Pattern Test

"The scatter plot unexpectedly forms a structured shape, suggesting an artificial pattern or underlying data artifact rather than a natural correlation."

"The scatter plot forms a question mark-like pattern, suggesting an intentional design rather than a natural correlation between Age and Body Temperature."

"The scatter plot forms an image resembling an alligator or crocodile-like face, suggesting an intentional arrangement of data points rather than a natural correlation."

"The scatter plot forms a structured pattern that appears to resemble a humanoid figure, suggesting that the data points were intentionally arranged rather than representing a natural relationship between Age and Body Temperature."

What This Means for AI in the Real World

This experiment highlights a critical limitation in modern AI: when models focus too narrowly on specific tasks, they can completely overlook obvious patterns. The fact that both humans and AI models failed to see the gorilla reinforces how bias and attention shape perception, whether biological or artificial.

In real-world applications, this kind of oversight can have serious consequences. A medical AI trained to analyze lab values might miss an obvious tumor in a scan. A financial model could overlook clear signs of market manipulation while focusing on statistical correlations. AI is only as effective as its ability to perceive and interpret data holistically, and when constrained by strict frameworks, it can miss critical insights that would be obvious from a broader perspective.

The most dangerous blind spots aren't the unknown unknowns, but the patterns hiding in plain sight. Progress in AI will likely come not from accumulating more knowledge, but from improving its ability to recognize what has been overlooked for too long.

If you're interested in discussing this experiment further, feel free to reach out to me. And if you'd like access to the source code for this agent, let me know. I'm considering open-sourcing it and would love to hear if there's interest! After all, I spent enough money on Anthropic API credits to feed a small gorilla for a month, so I hope you enjoyed this exploration!

"If a gorilla falls into a dataset and no AI sees it, does it make a statistical impact?"

References

Additional Notes

Image Recognition: If you start a new conversation with an AI and simply give it an image of the plot, it will almost always recognize the gorilla shape. The limitation matters most when the AI is in the middle of a data analysis task, rather than being handed the image on its own.

Reproducibility: Results with agents can vary significantly between runs and across model versions, so the agent may not identify the gorilla pattern on every attempt. I only tested Anthropic's Claude 3.7 Sonnet (with extended thinking disabled) for this experiment.